Generative AI is revolutionizing cybercrime. To combat this, organizations must adopt advanced security measures, enhance authentication, train employees, and collaborate across the industry.

The digital revolution has transformed the financial landscape, offering unprecedented convenience and accessibility. However, this progress has also created new vulnerabilities, which increasingly sophisticated cybercriminals exploit. The emergence of generative AI gives criminals powerful new tools and presents financial institutions with complex challenges. Tools like ChatGPT, DALL-E 2, and Stable Diffusion, while demonstrating remarkable creative potential, have also opened up new avenues for fraud and cybercrime. Financial institutions in both the UK and the US must therefore reassess existing security protocols and take a proactive approach to mitigating these threats. This is not merely an incremental evolution of cybercrime; it’s a paradigm shift that requires a strategic and comprehensive response.

Sophisticated phishing attacks

Traditional phishing attacks, often characterized by generic templates and easily detectable errors, are becoming increasingly obsolete. Generative AI empowers malicious actors to craft highly personalized and convincing emails, leveraging publicly available data to tailor messages to specific individuals. Imagine receiving an email that not only addresses you by name but also references specific details about your professional activities or personal interests. Perhaps it mentions a recent conference you attended, a project you’re leading, or even a family event gleaned from social media. This level of personalization significantly increases the likelihood of a successful attack: the message is more likely to slip past traditional spam filters, and it exploits human psychology. The recipient is far more likely to trust an email that appears genuinely relevant to their life, which makes them more susceptible to manipulation.

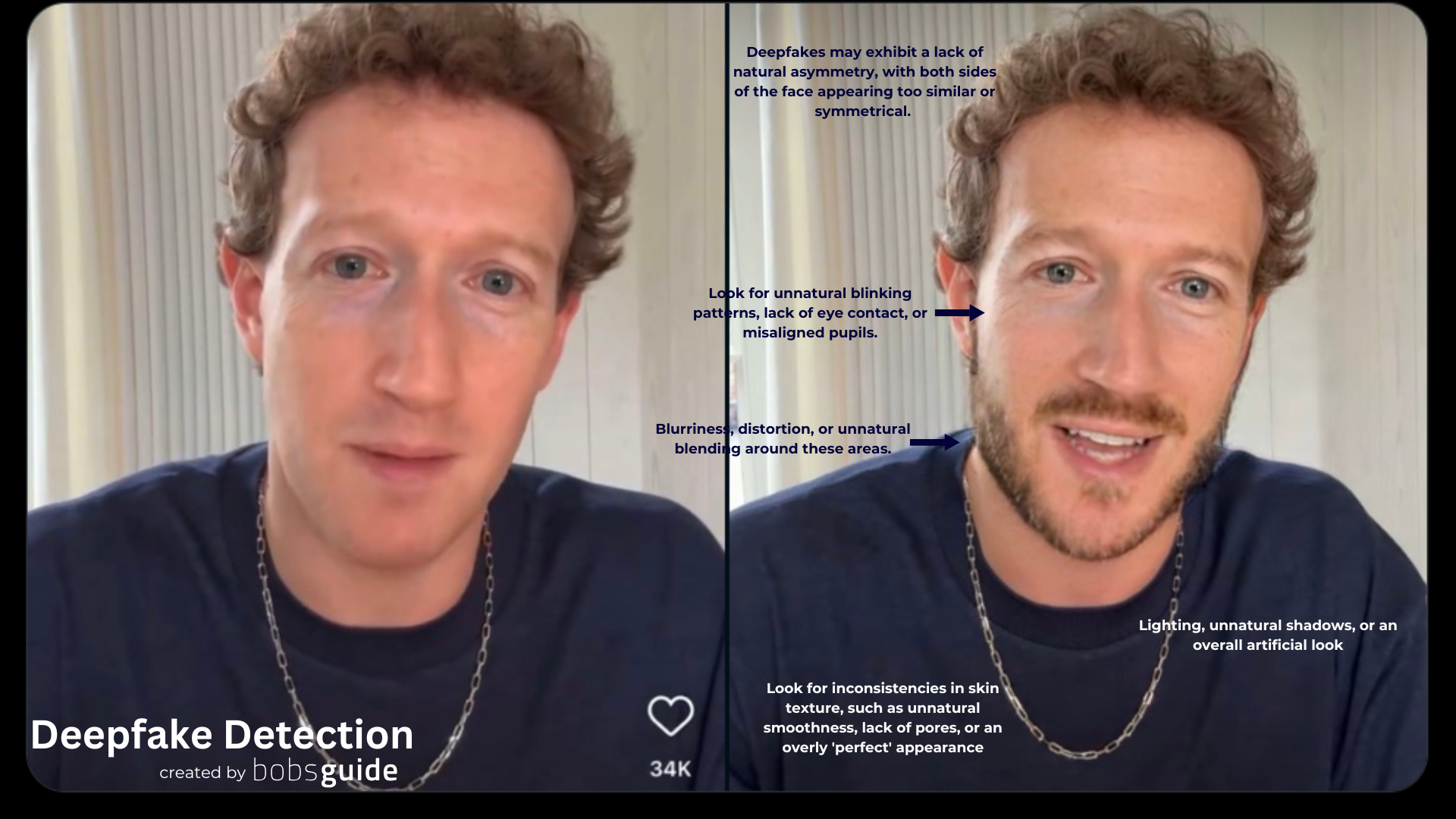

Deepfake scams

The ability to create realistic deepfake videos and audio recordings poses a profound threat to trust and authentication. Generative AI can be used to impersonate executives, colleagues, or even family members, manipulating individuals into divulging sensitive information or authorizing fraudulent transactions. Consider the potential impact of a deepfake video depicting a senior executive endorsing a fraudulent investment scheme. The realism of these deepfakes can make them incredibly difficult to detect, even for trained professionals. The implications extend beyond simple financial fraud; deepfakes can also be used to spread disinformation, manipulate markets, and damage reputations.

Automated malware creation

Beyond social engineering, generative AI can also be used to automate the creation of sophisticated malware. This capability allows malicious actors to develop and deploy malicious code at an unprecedented scale and speed. The potential for a surge in polymorphic malware, capable of constantly evolving to evade detection, is a serious concern. This dynamic adaptation poses a significant challenge for traditional antivirus software, which often relies on recognizing known signatures. AI-generated malware can mutate and adapt in real time, making it significantly harder for static security solutions to identify and neutralize it.

Synthetic identities

The creation of synthetic identities, a persistent challenge for financial institutions, is further amplified by generative AI. Malicious actors can leverage AI to fabricate entirely new identities, complete with seemingly legitimate credentials, for the purpose of opening fraudulent accounts, applying for loans, or conducting other illicit activities. These synthetic identities, blending real and fabricated data, are notoriously difficult to detect, posing a significant threat to Know Your Customer (KYC) and Anti-Money Laundering (AML) compliance. The ability to generate realistic-looking documentation, such as fake passports or driver’s licenses, further complicates the challenge.

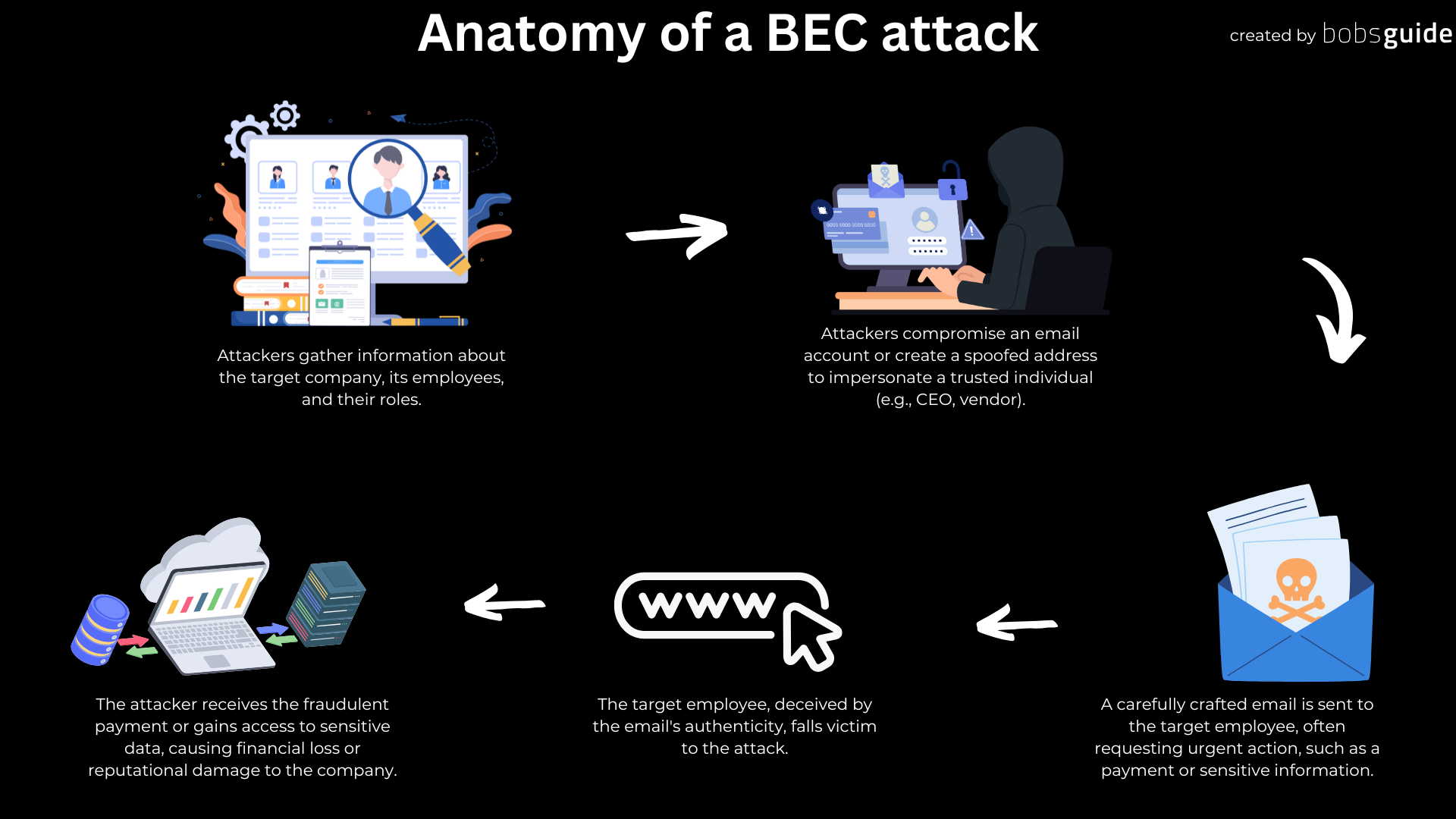

Business email compromise (BEC) attacks

Business Email Compromise attacks, a common and costly form of cybercrime, are also being enhanced by generative AI. Malicious actors can use AI to craft highly convincing BEC emails, impersonating senior executives or trusted partners to trick employees into making fraudulent wire transfers. The increased sophistication and personalization of these AI-generated emails make them exceptionally difficult to detect, even for experienced professionals. The emails can be crafted to mirror the writing style and communication patterns of the impersonated individual, making them appear even more authentic.

Mitigating the risks: a multi-layered approach

Combating the evolving landscape of AI-powered cybercrime requires a multi-faceted strategy that incorporates advanced technologies, robust employee training, and industry-wide collaboration. Here’s a deeper dive into some key approaches:

Advanced threat detection:

Traditional security solutions often rely on signature-based detection, which involves identifying known malware signatures or patterns. However, AI-generated malware can easily evade these methods by constantly evolving and changing its characteristics. To counter this, financial institutions must invest in advanced threat detection systems that go beyond simple signature matching.
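To make the limitation concrete, here is a minimal sketch of hash-based signature matching, one simple form of signature detection. The payload bytes and the `KNOWN_SIGNATURES` set are hypothetical placeholders, not real malware data or any vendor's format.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


# Hypothetical signature database: hashes of samples seen before.
known_sample = b"example-malicious-payload-v1"
KNOWN_SIGNATURES = {sha256_hex(known_sample)}


def matches_signature(payload: bytes) -> bool:
    """Flag a payload only if its exact hash is already known."""
    return sha256_hex(payload) in KNOWN_SIGNATURES


# A trivial mutation (one extra byte) produces a completely different hash.
variant = known_sample + b" "

print(matches_signature(known_sample))  # True
print(matches_signature(variant))       # False
```

The unchanged sample is caught, but the one-byte variant is not, which is why code that rewrites itself on every deployment can slip past exact-match signatures.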

These systems should leverage AI and machine learning algorithms to analyze vast amounts of data, identifying anomalies and suspicious patterns that might indicate an AI-generated attack. This includes analyzing not only the content of communications, such as emails or messages, but also the context, behavior, and historical data associated with them. For example, a system might flag an email that, while seemingly legitimate in content, originates from an unusual location or is sent at an unusual time for the sender.
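The example above can be sketched as a simple contextual check. This is an illustrative rule-based version only, assuming a hypothetical `SenderProfile` built from a sender's history; a production system would learn such baselines statistically rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class SenderProfile:
    """Hypothetical baseline built from a sender's past messages."""
    usual_countries: set[str]  # countries the sender normally sends from
    usual_hours: range         # hours of the day (0-23) the sender is normally active


def risk_flags(profile: SenderProfile, country: str, hour: int) -> list[str]:
    """Return contextual warning flags for a message, ignoring its content."""
    flags = []
    if country not in profile.usual_countries:
        flags.append("unusual_location")
    if hour not in profile.usual_hours:
        flags.append("unusual_time")
    return flags


profile = SenderProfile(usual_countries={"GB"}, usual_hours=range(8, 19))
print(risk_flags(profile, "GB", 10))  # []
print(risk_flags(profile, "BR", 3))   # ['unusual_location', 'unusual_time']
```

Note that the check never reads the message body, so a perfectly written AI-generated email can still be flagged on context alone.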

Furthermore, these advanced threat detection systems should be capable of learning and adapting over time. By continuously analyzing new data and identifying emerging threats, they can become more effective at detecting and preventing AI-powered attacks. This requires a proactive approach to threat intelligence, constantly monitoring the evolving landscape of cybercrime and updating detection models accordingly.

Enhanced authentication:

Authentication, the process of verifying user identities, is a critical line of defense against cybercrime. Traditional methods, such as passwords and security questions, are increasingly vulnerable to AI-powered attacks. Therefore, financial institutions must strengthen their authentication protocols by adopting multi-factor authentication (MFA) and exploring advanced biometric authentication methods.

MFA requires users to provide two or more authentication factors drawn from different categories: something they know (a password), something they have (a security token), or something they are (biometric data). This makes it significantly more difficult for attackers to gain unauthorized access, even if they have compromised one factor.
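As an illustration of the "something they have" factor, the sketch below implements the time-based one-time password scheme from RFC 6238 (built on RFC 4226) using only the Python standard library. The `verify_login` helper and the shared secret are hypothetical; real deployments would use a vetted library, tolerate clock drift, and rate-limit attempts.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low 4 bits of the last byte pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    return hotp(secret, int(unix_time) // step, digits)


def verify_login(password_ok: bool, submitted_code: str,
                 secret: bytes, unix_time: float) -> bool:
    """Hypothetical check: both the password and the token code must pass."""
    expected = totp(secret, unix_time)
    return password_ok and hmac.compare_digest(submitted_code, expected)


# Shared secret taken from the RFC 4226 test vectors.
secret = b"12345678901234567890"
print(totp(secret, 59))                          # 287082
print(verify_login(True, "287082", secret, 59))  # True
print(verify_login(False, "287082", secret, 59)) # False
```

A stolen password alone fails the check, because the attacker also needs the device holding the shared secret.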

Biometric authentication, particularly behavioral biometrics, offers an additional layer of security. Behavioral biometrics analyzes patterns in how users interact with their devices, such as typing speed, mouse movements, and scrolling patterns. This creates a unique user profile that can be used to detect anomalies and prevent unauthorized access, even if credentials are compromised.
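One simple way to picture this is to compare a session's typing rhythm against the user's own baseline. The sketch below is a deliberately simplified assumption: it uses a single feature (the average gap between keystrokes in milliseconds) and a z-score threshold, whereas real behavioral biometrics products combine many signals with trained models. The function name and threshold are illustrative.

```python
from statistics import mean, stdev


def is_anomalous(baseline_ms: list[float], session_ms: list[float],
                 threshold: float = 3.0) -> bool:
    """Flag a session whose mean keystroke gap is far from the user's baseline."""
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)  # sample standard deviation of the baseline
    if sigma == 0:
        # No variation in the baseline: any difference counts as anomalous.
        return mean(session_ms) != mu
    z = abs(mean(session_ms) - mu) / sigma
    return z > threshold


# Hypothetical baseline: this user's typical gaps between keystrokes.
baseline = [180.0, 190.0, 200.0, 210.0, 220.0]

print(is_anomalous(baseline, [195.0, 205.0]))      # False: matches the usual rhythm
print(is_anomalous(baseline, [90.0, 95.0, 100.0])) # True: far faster than usual
```

Because the profile depends on how a person types rather than on what they type, a correct password entered with an unfamiliar rhythm can still trigger a step-up check.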

Employee training:

While technology plays a crucial role in cybersecurity, the human element remains a critical factor. Employees are often the first line of defense against cyberattacks, and they need to be equipped with the knowledge and skills to identify and respond to AI-powered threats.

Employee training programs must be updated to address the specific risks posed by AI-generated scams. Employees need to be educated on how to identify deepfakes, personalized phishing attempts, and other AI-driven attacks. This training should include practical exercises and simulations to help them recognize and respond to these sophisticated threats.

Regular security awareness training, tailored to the evolving threat landscape, is essential. This training should not only focus on technical aspects but also on human psychology and social engineering tactics. By understanding how attackers exploit human vulnerabilities, employees can be more vigilant and less susceptible to manipulation.

Collaboration and information sharing:

The fight against AI-powered cybercrime cannot be won in isolation. Collaboration and information sharing within the financial industry are crucial for staying ahead of these evolving threats. Organizations like the Financial Services Information Sharing and Analysis Center (FS-ISAC) play a vital role in facilitating this collaboration, enabling financial institutions to share threat intelligence, best practices, and incident response strategies.

This collaborative approach allows the industry to collectively defend against emerging threats and share knowledge about new attack techniques. By pooling resources and expertise, financial institutions can create a more resilient ecosystem and better protect themselves and their customers.

Ethical AI development:

As financial institutions increasingly adopt AI technologies for various purposes, it is essential to prioritize ethical AI development and deployment. This includes ensuring transparency, accountability, and fairness in the use of AI systems, as well as mitigating the risks of bias and discrimination.

Financial institutions need to be mindful of the potential ethical implications of their AI systems and implement safeguards to prevent unintended consequences. This includes carefully considering the data used to train AI models, ensuring that it is representative and unbiased. It also involves establishing clear guidelines for the use of AI in decision-making processes, ensuring that human oversight and accountability are maintained.

The regulatory landscape:

Regulators in both the UK and the US are actively addressing the implications of AI for financial services. Financial institutions must stay informed about evolving regulations and ensure their security practices are compliant. This includes understanding the specific requirements related to data privacy, algorithmic transparency, and accountability.

The future of AI in cybersecurity:

The landscape of AI-driven cybercrime is constantly evolving. Financial institutions must remain vigilant, continuously adapting their security strategies and investing in new technologies to stay ahead of the curve. This requires a commitment to ongoing research, innovation, and collaboration. The ability to anticipate and adapt to these evolving threats will be crucial for maintaining the security and integrity of the financial system.

By embracing this multi-layered approach and fostering a culture of cybersecurity awareness, financial institutions can navigate the challenges posed by generative AI and build a more secure future for themselves and their customers.

Embracing the challenge

Generative AI presents both a challenge and an opportunity. By understanding the risks, implementing robust security measures, fostering collaboration, and embracing ethical AI development, financial institutions can protect themselves and their customers from the growing threat of AI-powered fraud and cybercrime. The future of financial security depends on it. This is not just about reacting to the current threats; it’s about proactively building a more secure future for the financial industry.
